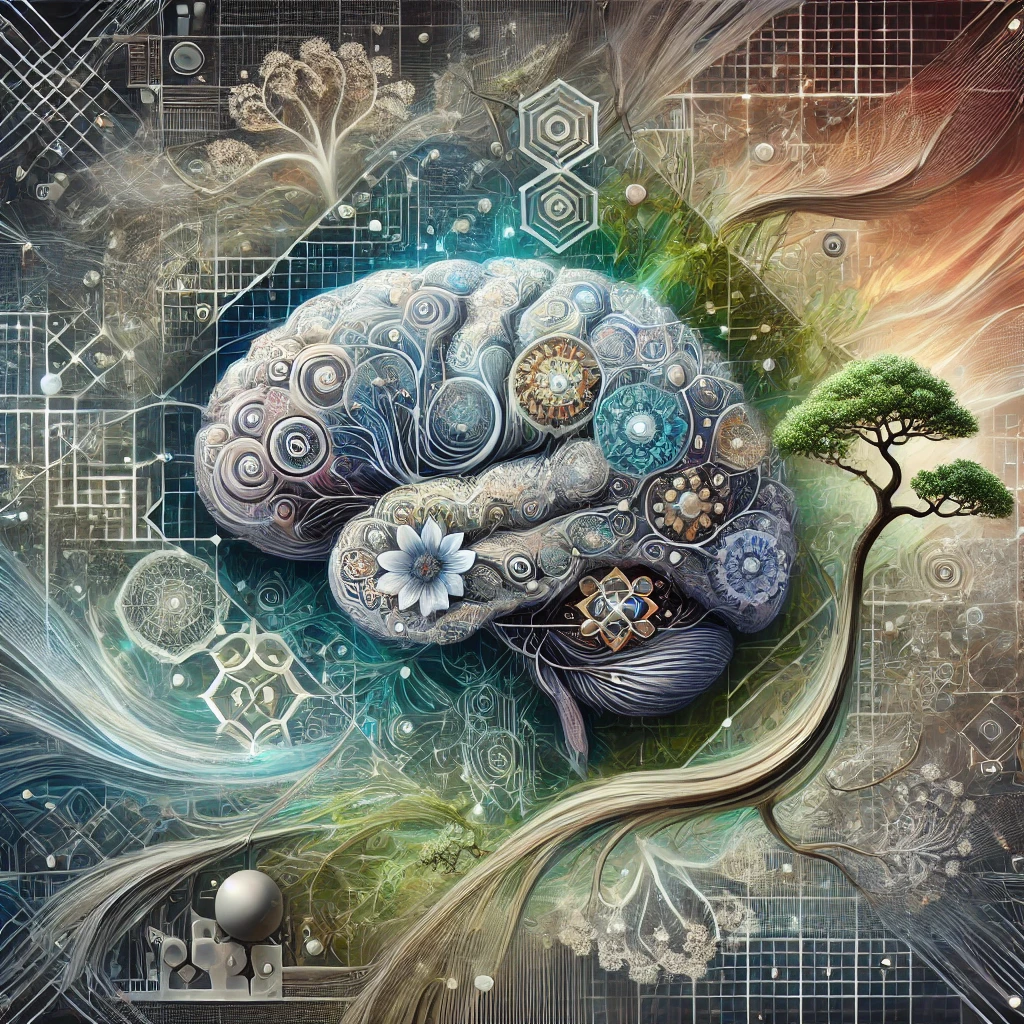

The Future is Artificial Intelligence, and it is Complex.

Many people are discussing AI these days, portraying it as a mysterious, cutting-edge technology that is rapidly taking over the world and transforming modern society. But despite such rapid progress, many of the underlying principles that govern AI, and the sciences that underpin it, remain difficult to grasp. The world of algorithms, datasets, networks, stochastic analysis, and so on often overwhelms the reader. To understand the complex world of AI, one must look at another lesser-known yet profoundly insightful and fascinating area of study: complexity science.

Complexity science, on its face, also presents a formidable façade of complex mathematical constructs and intricate theorems, attracting only the most dedicated mathematicians, physicists, and other specialists. But if one is brave enough to travel beyond that façade, one finds that the core ideas of complexity science are surprisingly intuitive and universally applicable, not just in AI or science but in every aspect of life. Indeed, the concepts of complexity science are all around us, and once one sees them, they are difficult to unsee. Complexity science is the key to understanding intelligence, whether artificial or natural. Where complexity science and AI intersect, they offer insights into how complex intelligent systems operate and how they can be understood.

AI does not always present neatly ordered systems with one-to-one causality. Instead, AI often consists of intricate, interconnected networks of components, such as neural networks, sub-systems, datasets, and algorithms, which interact with each other in complex ways. Yet order and intelligence emerge from this complex web of connections, often close to the edge of chaos. With its emphasis on systems that frequently defy linear logic, exist on the brink of chaos, and display emergent behavior, complexity science provides valuable insights into the analysis and understanding of AI systems. It offers tools that can help interpret how the individual components of a system, each following simple rules, can combine to create something bigger and more fascinating than the components themselves.

AI systems often exhibit characteristics that seem similar to those found in the natural world. These characteristics include emergent behavior, self-organization, and complex intelligence. In each of these instances, seemingly random interactions result in ordered patterns or intelligent behaviors. Therefore, we can infer that comprehending AI systems requires acknowledging that intelligence emerges from the interplay of numerous elements, each influencing the others in frequently unpredictable ways while adhering to basic principles. As one explores concepts such as fractals, strange attractors, emergence, and networks, which are fundamental to the study of complexity science, parallels begin to appear that help make sense of how AI systems learn, adapt, and evolve. This essay explores a few of the principles and concepts of complexity science that assist in the design and development of AI systems and advance AI adoption and evolution in the modern world.

"The whole is greater than the sum of its parts" has become a common maxim, and the idea has long been central to the study of complex systems found in nature. One concept in complexity science, fractals, exemplifies this adage. In the simplest of definitions, fractals are geometrical patterns that repeat at different levels and scales. Nature is the easiest place to observe fractals, as they manifest in endlessly repeating patterns like tree branches, cloud shapes, coastlines, and mountain ranges. What is intriguing about fractals is that they look much the same whether one zooms in or zooms out to observe them. It is this concept of self-similarity that finds use in the realm of AI.
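
Self-similarity is easier to see in a small construction than in a definition. The sketch below is only an illustration, not something drawn from the essay's sources: it builds the well-known Koch curve, and the function names `koch_segments` and `total_length` are hypothetical. Every segment is replaced by four copies of itself at one third of the size, so the same rule repeats at every scale.

```python
import math

def koch_segments(p, q, depth):
    """Recursively replace the segment p->q with four smaller copies of itself."""
    if depth == 0:
        return [(p, q)]
    (x1, y1), (x2, y2) = p, q
    dx, dy = (x2 - x1) / 3, (y2 - y1) / 3
    a = (x1 + dx, y1 + dy)            # one third of the way along
    b = (x1 + 2 * dx, y1 + 2 * dy)    # two thirds of the way along
    # Peak of the bump: the middle third rotated by 60 degrees around a.
    c = (a[0] + dx * 0.5 - dy * math.sqrt(3) / 2,
         a[1] + dx * math.sqrt(3) / 2 + dy * 0.5)
    segments = []
    for start, end in ((p, a), (a, c), (c, b), (b, q)):
        segments.extend(koch_segments(start, end, depth - 1))
    return segments

def total_length(segments):
    """Sum of the straight-line lengths of all segments."""
    return sum(math.dist(start, end) for start, end in segments)

curve = koch_segments((0.0, 0.0), (1.0, 0.0), depth=3)
print(len(curve), round(total_length(curve), 4))
```

Each extra level multiplies the number of segments by four and the total length by 4/3, which is why such a simple rule produces ever finer detail.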

AI systems too can exhibit self-similarity at various levels of functioning. A common instance of this is the processing of information by neural networks, which are the backbone of many AI systems. These systems are built from layer upon layer of artificial neurons, with each layer processing information. What starts as a simple pattern on one layer becomes increasingly complex as it passes through deeper layers, in the same way that a twig's pattern evolves into a branch's, a tree's, and ultimately the entire forest's pattern. Deep learning in neural networks builds complex understanding from simple bits of data. An AI system's fractal-like ability to break down a problem into smaller, self-similar pieces equips it to handle extremely large data sets and complex problem spaces. Without this fractal-like information processing, an AI system could not cope with the complexity of the real world. For example, image processing begins with the AI system first recognizing simple features, such as edges, colors, and contours. The AI system then builds on these simple patterns to process more details, eventually learning to analyze and recognize scenes, objects, faces, and so on. Such an approach in AI is known as hierarchical learning, where the system comprehends complex data by building it up from simple patterns. Each layer of processing functions like one level of a fractal, which adds power and expressiveness while also making the system easy to scale.
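
The layered build-up described above can be sketched in a few lines. This is a deliberately simplified illustration with hand-written detectors. A real neural network learns its filters from data, and the names `edge_layer`, `column_layer`, and `object_layer` are hypothetical. The point is only that each layer works on the output of the one below it.

```python
def edge_layer(image):
    """Layer 1: mark where brightness changes between horizontal neighbours."""
    return [[abs(row[i + 1] - row[i]) for i in range(len(row) - 1)] for row in image]

def column_layer(edges):
    """Layer 2: add up edge responses down each column to score vertical lines."""
    return [sum(column) for column in zip(*edges)]

def object_layer(line_scores, height):
    """Layer 3: report a 'bar' if exactly two full-height vertical lines exist."""
    full_lines = [i for i, score in enumerate(line_scores) if score == height]
    return "bar" if len(full_lines) == 2 else "no bar"

# A tiny 3x6 image containing a bright vertical bar two pixels wide.
image = [
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0],
]

edges = edge_layer(image)          # simple features: local edges
lines = column_layer(edges)        # intermediate features: vertical lines
print(object_layer(lines, height=len(image)))
```

No single layer "knows" what a bar is. The label only appears once edges have been combined into lines and lines into a shape.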

Emergence, a concept of great interest in complexity science, manifests in all complex systems and serves as their most distinctive feature. A complex system exhibits emergence when the interaction of its components produces behavior that none of the components exhibits on its own. These behaviors can be complex and often unpredictable. A flock of birds offers perhaps the easiest illustration. No leader directs the flock. Instead, each bird acts independently while following simple rules of interaction, such as maintaining a distance from the other birds in the flock. As a result, the entire flock behaves like a cohesive unit and appears almost fluid and organic. Deep learning, multi-agent systems, swarm intelligence, and other AI systems also exhibit emergence. In all these AI systems, the components or sub-systems follow simple rules of engagement, act independently, and yet give rise to complex behaviors and outcomes. For example, in swarm intelligence, many small agents follow simple rules but collectively solve complex problems such as route optimization. The agents thus collectively accomplish a goal far beyond what a single agent could achieve. The same pattern repeats in deep learning, where each neuron of the neural network performs a simple task, but together the neurons perform complex tasks such as image recognition, natural language processing, or creative problem solving. This emergent property of AI systems is what gives them their power. However, it is also a cause for concern. Emergence can lead to breakthrough solutions but also to unintended consequences. AI systems can uncover patterns that even their designers did not anticipate. These unintended consequences can include bias or algorithmic anomalies, which can have far-reaching effects. For instance, consider a facial recognition system that provides recommendations based on its findings. If the system picks up deep-rooted bias in the training data, it may establish a new rule and recommendation that is neither correct nor ethical. It is thus important to understand emergence in complex systems so that the AI systems designers eventually deploy align with human values and ethics.
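
The flock example can be reduced to a toy model. The sketch below is an assumption-laden simplification rather than a faithful flocking simulation. Agents sit on a ring, headings are plain numbers in degrees, and the only rule is "turn toward the average of yourself and your two neighbours". The names `step`, `spread`, and `simulate` are hypothetical.

```python
def step(headings):
    """Each agent adopts the mean heading of itself and its two ring neighbours."""
    n = len(headings)
    return [
        (headings[(i - 1) % n] + headings[i] + headings[(i + 1) % n]) / 3
        for i in range(n)
    ]

def spread(headings):
    """How far apart the most divergent agents are."""
    return max(headings) - min(headings)

def simulate(headings, steps):
    """Apply the local rule repeatedly and return the final headings."""
    for _ in range(steps):
        headings = step(headings)
    return headings

start = [0.0, 90.0, 20.0, 70.0, 45.0, 10.0]   # six agents, six different directions
final = simulate(start, steps=50)
print(round(spread(start), 3), round(spread(final), 6))
```

No agent is told which direction the group should take, yet the headings converge on a shared value. The common direction is a property of the group, not of any individual rule.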

Emergence is unexpected behavior that results from component or agent interactions in complex systems. Complex systems are also adaptive because they can change or reorganize in response to external conditions. Thus, we can infer that emergence equips complex systems to adapt to changing conditions. This adaptability allows the systems to maintain a balance between order and randomness, which is another key concept in complexity science. AI systems also adapt to changing environments by constantly learning and evolving. The delicate balance between order and randomness becomes an important design element in any AI system, because together they underpin the system's adaptability and functionality. Complexity science has observed that randomness fosters adaptation and evolution in a complex system by encouraging variation. Randomness in AI design allows the system to navigate complex problem spaces without becoming stuck in suboptimal solutions. For instance, we introduce randomness during the training of an AI model to prevent it from overspecializing on the training data. This overspecialization is known in machine learning as overfitting. Overfitting is counterproductive because the model becomes so accustomed to the training data that it cannot handle new inputs or previously unseen data, which hinders its ability to tackle new problems. Thus, randomness helps balance precision against the ability to accommodate new, unknown inputs, because these random tweaks allow the model to explore different solutions.
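
A small search problem shows how randomness keeps a system from settling on a suboptimal solution. The sketch below is illustrative only. The landscape is a made-up list of scores, random restarts stand in for the many ways randomness is used in practice, and the names `LANDSCAPE`, `hill_climb`, and `random_restart_climb` are hypothetical.

```python
import random

# Toy scores: index 2 is a local peak, index 8 is the global peak.
LANDSCAPE = [1, 3, 5, 4, 2, 1, 2, 6, 9, 7, 3]

def hill_climb(start):
    """Greedy search: keep stepping to the better neighbour until none is better."""
    pos = start
    while True:
        neighbours = [i for i in (pos - 1, pos + 1) if 0 <= i < len(LANDSCAPE)]
        best = max(neighbours, key=lambda i: LANDSCAPE[i])
        if LANDSCAPE[best] <= LANDSCAPE[pos]:
            return pos
        pos = best

def random_restart_climb(restarts, seed=0):
    """Run the same greedy search from random starting points and keep the best."""
    rng = random.Random(seed)
    results = [hill_climb(rng.randrange(len(LANDSCAPE))) for _ in range(restarts)]
    return max(results, key=lambda i: LANDSCAPE[i])

print(hill_climb(0))               # the orderly search stops at the first peak it meets
print(random_restart_climb(200))   # random starting points expose the higher peak
```

The greedy rule supplies the order and the random starting points supply the exploration. Neither alone would be both efficient and reliable.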

Randomness is also important in optimization algorithms, especially genetic algorithms, where randomness in the form of mutation introduces variation and diversity and can keep the overall system from prematurely settling on suboptimal solutions. This balance between optimization and exploration helps the AI system navigate complex problem spaces through the continuous exploration of new possibilities. Randomness in neural networks is important to maintain the balance between rigidity and flexibility. Rigidity means the preservation of previously acquired knowledge, while flexibility means the ability to explore and acquire new knowledge. AI systems are designed to explore and navigate vast and complex data in search of solutions. In such situations, fluctuations or noise in the data may nudge the system toward suboptimal solutions, but the introduction of randomness during the training phase can fortify the model and equip it to explore further and find a better solution. All complex systems adapt and evolve due to this balance between order and randomness. Because an AI system is itself a complex system, its design must incorporate this understanding of the balance between order and randomness.
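
The role of mutation can be made concrete with a minimal genetic algorithm. The sketch below is a toy under stated assumptions. The task is simply to maximize the number of ones in a bit string, and the names `fitness`, `evolve`, and `START_POPULATION` are hypothetical. Every starting individual has a zero in its first position, so crossover alone can never produce a one there.

```python
import random

def fitness(bits):
    """Toy objective: count the ones in the bit string."""
    return sum(bits)

def evolve(population, generations, mutation_rate, seed=0):
    """Keep the fitter half, refill with mutated crossover children, repeat."""
    rng = random.Random(seed)
    for _ in range(generations):
        population = sorted(population, key=fitness, reverse=True)
        parents = population[: len(population) // 2]    # survivors, kept unchanged
        children = []
        while len(parents) + len(children) < len(population):
            mum, dad = rng.sample(parents, 2)
            cut = rng.randrange(1, len(mum))             # one-point crossover
            child = mum[:cut] + dad[cut:]
            # Mutation: flip each bit with a small probability.
            child = [b ^ 1 if rng.random() < mutation_rate else b for b in child]
            children.append(child)
        population = parents + children
    return max(population, key=fitness)

# Every individual starts with a 0 in the first position.
START_POPULATION = [
    [0, 1, 0, 1, 0, 0, 1, 0],
    [0, 0, 1, 0, 1, 0, 0, 1],
    [0, 1, 1, 0, 0, 1, 0, 0],
    [0, 0, 0, 1, 0, 1, 1, 0],
]

print(evolve(START_POPULATION, generations=50, mutation_rate=0.0))
print(evolve(START_POPULATION, generations=50, mutation_rate=0.05))
```

With the mutation rate at zero, the first bit stays zero however long the population evolves, because recombination can only reshuffle what already exists. Mutation is the only source of genuinely new material.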

Information can be considered the foundation of an AI system. Similarly, in complexity science, information is the key building block of any complex system. Information flows through the complex system, enabling it to learn, adapt, and evolve. In the realm of AI, data is the lifeblood of any AI system. Every AI algorithm feeds on data, and the way that data is stored, processed, and transmitted determines the system's success or failure. Algorithms use the information embedded in massive datasets to identify patterns, make predictions, and adapt over time. This underscores the importance of data quality in AI, as meaningful information can be extracted only from high-quality data. Excessive noise in the dataset can lead to misleading results, while insufficient variation can render the AI model rigid and insensitive to new inputs. AI systems, like the brain, process and transform information as it passes through various layers of neurons. In this way, the system learns complex patterns and makes better decisions. The flow of information through the neural network is therefore crucial to maintaining the system's adaptability and intelligence.
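
One common way to put a number on "variation" in a dataset is Shannon entropy. It is used here as an illustrative measure that the essay itself does not name, and the function name `shannon_entropy` is hypothetical. A dataset whose labels are all identical carries zero bits of information per label, while an evenly mixed one carries the most.

```python
import math
from collections import Counter

def shannon_entropy(labels):
    """Average information per label, in bits."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

uniform = ["cat"] * 8                  # no variation at all
balanced = ["cat", "dog"] * 4          # two classes, evenly mixed
skewed = ["cat"] * 7 + ["dog"]         # some variation, but very little

for name, data in (("uniform", uniform), ("balanced", balanced), ("skewed", skewed)):
    print(name, round(shannon_entropy(data), 3))
```

A measure like this says nothing about whether the labels are correct, so it speaks to variation only, not to noise.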

If information serves as the foundation of AI systems, then the structure and design of networks can be viewed as the infrastructure of these systems. In complex systems, multiple agents interact with each other and with the environment. We can view this collection of agents as a network, with each agent or sub-system functioning as a node and each interaction serving as a link, or edge, within the network. Neurons in the brain, individuals in a social network, and the components of other intricate systems all exhibit this common pattern of interconnected parts. It is through this network that information flows. Therefore, we can infer that the network's structure, or topology, significantly influences its resilience, robustness, and ability to evolve. Layers upon layers of interconnected agents form the core of AI systems. It is this network that stores, processes, modifies, and transmits information, and it determines how well the AI system learns from experience and improves. These networks in AI systems also demonstrate other characteristics that are similar to complex systems, such as self-organization. In AI systems, connections between elements become stronger or weaker based on the data. Positive outcomes (feedback) reinforce the learning, while negative outcomes present the opportunity to discard the pattern and search for a new one. Similar to complex systems, AI models decentralize decision-making across various network nodes. Multi-agent AI systems demonstrate similar behavior. This suggests that the study of complex systems and their underlying networks can significantly inform the analysis and design of AI systems, including artificial neural networks. Designing the topology of the networks that make up AI systems carefully is important for optimizing their performance and ensuring their adaptability in complex environments.
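
The link between topology and resilience can be checked directly on two small networks. The sketch below is a minimal illustration with hypothetical names (`is_connected`, `without_node`, `star`, `ring`). Both graphs have five nodes, but they respond very differently when one node fails.

```python
from collections import deque

def is_connected(graph):
    """Breadth-first search: can every node be reached from an arbitrary start?"""
    if not graph:
        return True
    start = next(iter(graph))
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in graph[node]:
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return len(seen) == len(graph)

def without_node(graph, removed):
    """Copy of the graph with one node, and every edge touching it, taken out."""
    return {
        node: [m for m in neighbours if m != removed]
        for node, neighbours in graph.items()
        if node != removed
    }

# Nodes map to lists of neighbours (edges are listed in both directions).
star = {0: [1, 2, 3, 4], 1: [0], 2: [0], 3: [0], 4: [0]}          # one central hub
ring = {0: [4, 1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 0]}    # no hub

print(is_connected(without_node(star, 0)))   # losing the hub splits the star
print(is_connected(without_node(ring, 0)))   # the ring survives any single loss
```

The star moves information in fewer hops but depends entirely on its hub, while the ring is slower but has no single point of failure.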

In conclusion, complexity science fosters the development of practical tools, concepts, and frameworks for the analysis and design of AI systems. The ideas and principles of complexity science make it easier to understand intelligence, which is more than just a chain of events; it is made up of many complex interactions between separate, independent parts. Using these tools, designers can build systems that are resilient, adaptable, and capable of solving the problems humanity faces. These insights from complexity science will play a crucial role in guiding the design of AI systems that can meet the challenges of the modern world while maintaining alignment with human values, ethics, and morality.